公司发的圣诞礼物被一个自以为是的胖女人拿走了,不开心

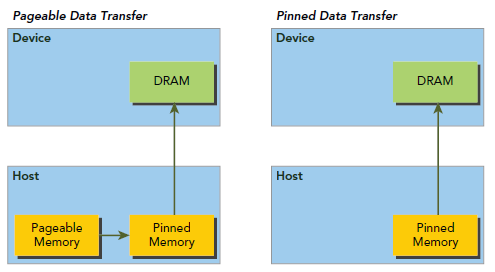

众所周知GPGPU的性能瓶颈为PCI-E传输速度,数据传输时会导致运算资源闲置。因此NVIDIA发明了一个很牛逼的技术Zero Copy,它把主机内存直接映射到GPU内存上,在GPU需要数据时直接从主机内存寻找,隐式的传输到GPU中。还有另一个技术叫Pinned Memory,会在产生一个不会被分页的内存,这块内存不会被交换到磁盘的虚拟内存上,内存地址也不会被重新定位,因此,相比普通的Pageable Memory有更高的速度。使用Pinned Memory是一定会提高性能的,不过也需要适当使用,否则太多Pinned Memory会把Host Memory给挤爆了(因为它不会分页到虚拟内存去)。

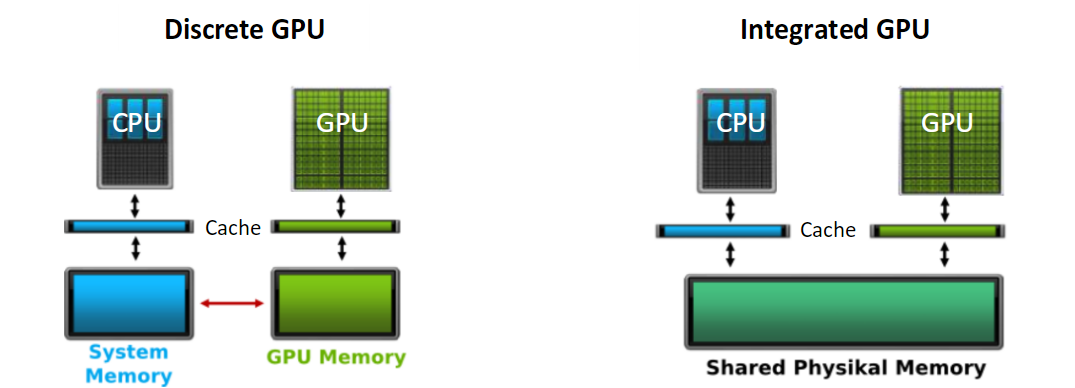

0x00 Zero Copy 对于普通的GPU使用Zero Copy以后,读取的数据速度限制为PCI-E的速度,所以不适用于频繁读取数据的程序,直到 NVIDIA TX-1(TK-1)的出现。在TX-1中,CPU/GPU共享memory(如图2 Integrated GPU,图3 更详细的展示了TX-1 的架构),使用Zero Copy的速度与cudaMalloc开辟的内存的速度一样!在这种情况下,Zero Copy会完全节省掉内存传输时间,特别对于流媒体的应用效果显著。

但是事情真的像我们想像中的这么完美吗?

Zero Copy不通过GPU缓存直接从内存中读取数据(图4),没有缓存的后果显而易见,有些时候(比如反复读取同一块数据)反而会导致性能下降。来自nvidia devtalk的帖子[1 ,2 ]解释了这个问题,文献[3 ,4 ]做了详细实验。

0x01 Zero Copy 的 CUDA 实现 1.标准的CUDA Pipeline:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 // Host Arrays float* h_in = new float[sizeIn]; float* h_out = new float[sizeOut]; //Process h_in // Device arrays float *d_out, *d_in; // Allocate memory on the device cudaMalloc((void **) &d_in, sizeIn )); cudaMalloc((void **) &d_out, sizeOut)); // Copy array contents of input from the host (CPU) to the device (GPU) cudaMemcpy(d_in, h_in, sizeX * sizeY * sizeof(float), cudaMemcpyHostToDevice); // Launch the GPU kernel kernel<<<blocks, threads>>>(d_out, d_in); // Copy result back cudaMemcpy(h_out, d_out, sizeOut, cudaMemcpyDeviceToHost); // Continue processing on host using h_out

零拷贝的CUDA pipeline:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 // Set flag to enable zero copy access cudaSetDeviceFlags(cudaDeviceMapHost); // Host Arrays float* h_in = NULL; float* h_out = NULL; // Process h_in // Allocate host memory using CUDA allocation calls cudaHostAlloc((void **)&h_in, sizeIn, cudaHostAllocMapped); cudaHostAlloc((void **)&h_out, sizeOut, cudaHostAllocMapped); // Device arrays float *d_out, *d_in; // Get device pointer from host memory. No allocation or memcpy cudaHostGetDevicePointer((void **)&d_in, (void *) h_in , 0); cudaHostGetDevicePointer((void **)&d_out, (void *) h_out, 0); // Launch the GPU kernel kernel<<<blocks, threads>>>(d_out, d_in); // No need to copy d_out back // Continue processing on host using h_out

0x02 Zero Copy 的 OpenCV 实现 1. OpenCV 3

OpenCV3可以使用cv::cuda::HostMem来使用ZeroCopy和Pinned Memory,

PAGE_LOCKED: sets a page locked memory type used commonly for fast and asynchronous uploading/downloading data from/to GPU.SHARED: specifies a zero copy memory allocation that enables mapping the host memory to GPU address space, if supported.WRITE_COMBINED: sets the write combined buffer that is not cached by CPU. Such buffers are used to supply GPU with data when GPU only reads it. The advantage is a better CPU cache utilization.

详细参见:cv::cuda::HostMem Class Reference

2. OpenCV 2

Regular cv::gpu::GpuMat

从这抄了一段代码https://github.com/Error323/gpumat-tk1

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 #include <iostream> #include <vector> #include <opencv2/opencv.hpp> #include <opencv2/gpu/gpu.hpp> #include "timer.h" #define ITERS 100 using namespace cv; using namespace std; void compute(gpu::GpuMat &in, gpu::GpuMat &bgr, gpu::GpuMat &out) { cv::gpu::demosaicing(in, bgr, cv::COLOR_BayerBG2BGR); cv::gpu::resize(bgr, out, out.size()); } int main(void) { int w = 4608; int h = 3288; int wnew = 800; int hnew = 600; Mat in(h, w, CV_8UC1); Mat out(hnew, wnew, CV_8UC3); gpu::GpuMat d_in; gpu::GpuMat d_bgr(h, w, CV_8UC3); gpu::GpuMat d_out(hnew, wnew, CV_8UC3); double t = GetRealTime(); for (int i = 0; i < ITERS; i++) { in.setTo(i); d_in.upload(in); compute(d_in, d_bgr, d_out); d_out.download(out); } cout << "Old Time: " << GetRealTime()-t << " (" << cv::sum(out)[0] << ")" << endl; gpu::CudaMem c_in(h, w, CV_8UC1, gpu::CudaMem::ALLOC_ZEROCOPY); gpu::CudaMem c_out(hnew, wnew, CV_8UC3, gpu::CudaMem::ALLOC_ZEROCOPY); d_in = c_in.createGpuMatHeader(); d_out = c_out.createGpuMatHeader(); out = c_out.createMatHeader(); t = GetRealTime(); for (int i = 0; i < ITERS; i++) { d_in.setTo(i); compute(d_in, d_bgr, d_out); } cout << "New Time: " << GetRealTime()-t << " (" << cv::sum(out)[0] << ")" << endl; return 0; }

0x03 参考 [1]OpenCV Performance TK1 [2]Regarding Usage of Zero Copy on TX1 to improve performance [3]PPT: General purpose processing using embedded GPUs: A study of latency and its variation [4]An Evaluation of the NVIDIA TX1 for Supporting Real-timeComputer-Vision Workloads NVIDIA Tegra TK/X 系列板子的零拷贝 (zero copy) 问题 Cuda锁页内存和零复制 CUDA零复制内存 CUDA学习笔记九 CPU和GPU内存交互